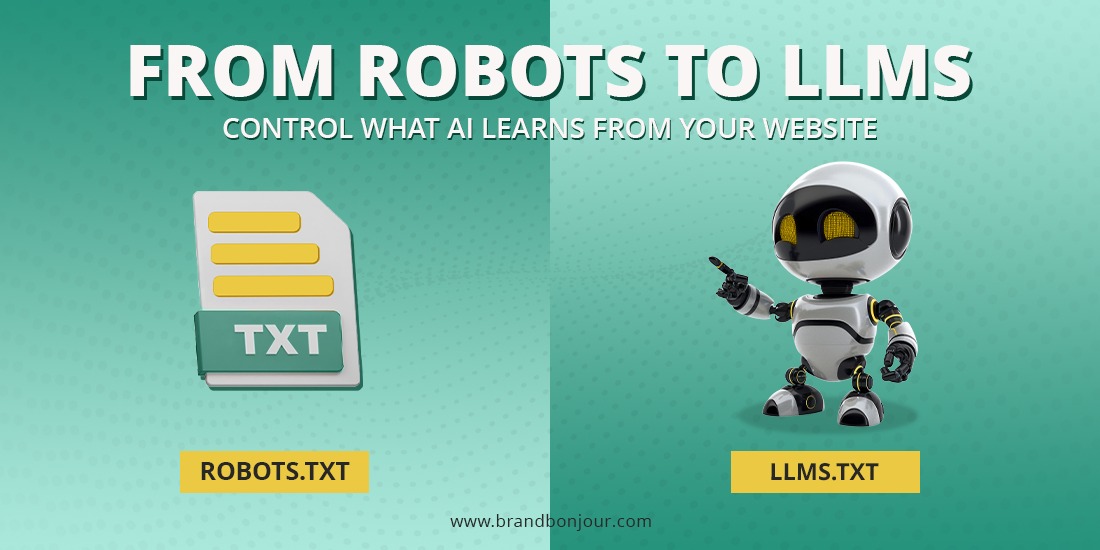

In the evolving digital landscape, controlling how machines interact with your website matters more than ever. Traditionally, we’ve relied on robots.txt to guide search engine crawlers. But with the rise of AI and Large Language Models (LLMs), a newer proposal called LLMs.txt is emerging.

Robots.txt – The Old Guard

- Introduced in 1994.

- Primarily designed for search engines like Google, Bing, and Yahoo.

- Defines which parts of a website can or cannot be crawled.

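- Focused on crawl control, which in turn shapes what search engines can index and show in search results (SERPs). It is a voluntary convention: well-behaved crawlers follow it, but it does not technically block access.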
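To make the crawl rules above concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The rules, the `example.com` URLs, and the `build_parser` helper are hypothetical and only for illustration; `GPTBot` is included as one example of an AI crawler user agent that can be addressed in the same file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an illustrative site (not from any real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

User-agent: GPTBot
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A search crawler with no dedicated group falls back to the "*" rules.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post"))    # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))  # False

# A crawler with its own group follows that group's rules instead.
print(parser.can_fetch("GPTBot", "https://example.com/blog/post"))       # False
```

The parser only reports what the file asks for. Whether a given crawler actually honors those rules is up to the crawler.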

LLMs.txt – The New Player

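- A proposed way to guide how AI models like ChatGPT, Gemini, or Claude read your site.

- Gives website owners a place to state how they want their content presented to and used by AI systems. In the 2024 proposal, the file is a short Markdown summary that points models to the pages the owner wants them to read.

- Extends beyond search: it’s about content usage and ownership in the AI age.

- Supports data transparency, though like robots.txt it is a voluntary signal and cannot by itself prevent unauthorized scraping for AI datasets.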
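As a rough illustration of what such a file can look like, the sketch below renders a small llms.txt-style document. It assumes the layout described in the 2024 llms.txt proposal (a title heading, a one-line blockquote summary, then sections of annotated links). The `build_llms_txt` helper, the site name, and the URLs are hypothetical.

```python
def build_llms_txt(
    title: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Render an llms.txt-style Markdown document (assumed layout, see lead-in)."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        # Each link is (name, url, note), rendered as an annotated Markdown link.
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical site data, for illustration only.
llms_txt = build_llms_txt(
    title="Example Co",
    summary="Example Co sells project-planning software for small teams.",
    sections={
        "Docs": [
            ("Getting started", "https://example.com/docs/start.md", "Setup guide"),
            ("Pricing", "https://example.com/pricing.md", "Plans and limits"),
        ],
    },
)
print(llms_txt)
```

The output would be saved as `/llms.txt` at the site root. The file describes content for models to read; it is a signal, not an enforcement mechanism.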

Quick Comparison

| Feature | Robots.txt | LLMs.txt |
|---|---|---|
| Launched | 1994 | 2024 (emerging standard) |
| Purpose | Control crawler indexing | Control AI model data usage |
| Audience | Search engines | Large Language Models (LLMs) |
| Focus | SEO rankings & visibility | Content ownership & ethical AI training |

Why You Should Care

If you want visibility on Google, you tune robots.txt. If you care about how AI platforms read and use your content, LLMs.txt is the place to say so, with the caveat that both files depend on crawlers and models choosing to honor them. Used together, they are complementary tools for protecting and promoting your brand online.
